From zero dataset to a trained AI model in under 15 minutes with ONESTEPAI and STABILITY AI

This blog post is for anyone interested in quickly creating their own vision-based AI models without coding or prior AI knowledge.

Synthetic datasets and AutoML yield rapid AI model builds

While the title is a bit of a tease, the reality is that recent progress in AI land is drastically lowering the barriers to pursuing your own AI applications.

So you say we have WHAT in the backyard?

My spouse ❤️ had recently installed some cameras along our backyard fence and wanted to record the wildlife traffic along the top of the fence, day and night.

We had already observed a caravan of raccoons, squirrels, and the occasional mouse going by, some during the day and some at night. We even started giving names to the possum and a couple of distinctive squirrels.

Now you want me to do WHAT?

My spouse was tired of hearing my reports on all these new AI advancements, so she presented me with a new challenge 😆: be helpful.

It started when I was tasked with building a detector application for the (rarer) raccoon 🦝 that my wife could run on our smart cameras 📷.

I accepted the challenge and wanted to give it a quick go. Around the same time, my friends at ONESTEPAI were getting ready to launch their new IoT AutoML solution, which triggered some further thoughts.

After a quick discussion with their head of business, Mariusz, they agreed to give me early access to their portal.

The plan was to use their online “dataset manipulator” and their “automated classifier builder” to build that “raccoon detector” AI model.

The creation of AI models for vision applications still has daunting requirements:

- Lots of images to assemble (can be time-consuming)

- GPU to train (can be expensive)

- The knowledge to connect the two

My somewhat experimental approach makes things a lot easier.

So, I investigated combining two compelling technologies that don’t require much specialized knowledge:

Stable Diffusion for building large datasets

IoT AutoML for training an AI model

Stable Diffusion was recently released by STABILITY AI and is responsible for a “Cambrian explosion of creativity” in many vision-based applications, including “AI ART”.

In our case, we show that Stable Diffusion is also excellent at generating never-before-seen, high-fidelity images of these wild creatures.

In this blog, I elaborate on this surprisingly effective approach and introduce some online services that might help those with similar requirements.

Building a large, high-fidelity dataset, quickly.

I wanted to move quickly and spend little time searching for and reviewing existing datasets that could serve as a seed. We’ll get back to that in a separate post.

Instead, the idea was to create a new dataset from scratch, using pictures I would not need to buy and that would not be encumbered by potential copyright issues.

Also, from recent experience with several “text to image” programs, I had discovered that creating new images was now relatively quick, even on older, low-cost GPUs (4 GB of VRAM or more).

It turns out that ONESTEPAI rents out virtual GPUs, and they offered me a 4 GB V100 to create the datasets.

“Stable Diffusion” hosted at ONESTEPAI (OSAI)

To generate these datasets, I installed “Stable Diffusion” from stability.ai.

Using a GUI, you enter simple text prompts and create tens of images in short order. Here is the result for the text prompt “raccoon sitting on top of a fence”:

And here is another example, with “possum sitting on top of a fence” as the text prompt.

Not bad, hey? I swear I saw these two last night, except now I have a daytime picture of each 😆.

All right, now the fun can start. I will create the following datasets, and maybe more:

- Raccoon (300 🦝 images)

- Coyote (300 🐺 images)

- Javelina (300 🐷 images)

- Possum (300 🐀 images)

- Squirrel (300 🐿 images)

- Others…

Yes, beyond the initial “Raccoon” 🦝 dataset, I added a few more classes, as I was reminded that our local wildlife is pretty healthy.

Also, after an earlier successful attempt with the “Raccoon” dataset, it was suggested 🍯 that I add the “Coyote” 🐺 and “Javelina” 🐷 datasets, as they are representative of what shows up in my in-laws’ backyard in Arizona. More fun for me 🆗
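To make the dataset plan above concrete, here is a minimal sketch that turns the class list and the 300-images-per-class target into a list of (label, prompt, seed) jobs. The scene templates are hypothetical examples, not the prompts I actually used; each job would then be fed to whatever Stable Diffusion front end you run (a GUI, or a library such as Hugging Face diffusers). Giving every image its own seed gives each generation a different starting noise, so repeated prompts still produce different pictures.

```python
from itertools import cycle

# Classes and per-class target from the dataset list in this post.
CLASSES = ["raccoon", "coyote", "javelina", "possum", "squirrel"]
IMAGES_PER_CLASS = 300

# Hypothetical scene templates (an assumption, not from the post) to add variety.
SCENES = [
    "{animal} sitting on top of a fence",
    "{animal} walking along a backyard fence at night",
    "{animal} on a wooden fence, security camera photo",
]


def build_generation_plan(classes, per_class, scenes, base_seed=0):
    """Return one (label, prompt, seed) tuple per image to generate."""
    plan = []
    seed = base_seed
    for label in classes:
        templates = cycle(scenes)  # rotate through the scene templates
        for _ in range(per_class):
            prompt = next(templates).format(animal=label)
            plan.append((label, prompt, seed))
            seed += 1  # distinct seed per image -> different starting noise
    return plan


if __name__ == "__main__":
    plan = build_generation_plan(CLASSES, IMAGES_PER_CLASS, SCENES)
    print(len(plan))  # 1500
    print(plan[0])    # ('raccoon', 'raccoon sitting on top of a fence', 0)
```

Five classes at 300 images each means 1,500 generation jobs in total.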

As you discover these new “image creation” superpowers, you will likely assemble more extensive training datasets, so expect to spend more than 15 minutes doing that.

But still, my first attempt went from zero “raccoon” pictures to a trained classifier running in a cloud-hosted web app in less than 15 minutes!

So, equipped with your new Stable Diffusion superpowers, it takes just a few minutes to generate hundreds of pictures. I had to discard a few, but most images provide a credible rendering of the desired animal.
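Discarding the odd bad rendering is a manual review step, but the bookkeeping around it is easy to script. The sketch below is only an illustration: it assumes you have a list of (label, filename) pairs for the generated images and a set of filenames you flagged during review, and it reports how many replacement images each class still needs to reach the 300-image target.

```python
TARGET_PER_CLASS = 300  # per-class target from the dataset plan


def curate(generated, rejected):
    """Group generated (label, filename) pairs by class, skipping rejected files."""
    kept = {}
    for label, filename in generated:
        files = kept.setdefault(label, [])  # register the class even if all files are rejected
        if filename not in rejected:
            files.append(filename)
    return kept


def shortfall(kept, target=TARGET_PER_CLASS):
    """Number of extra images each class needs to reach `target`."""
    return {label: max(0, target - len(files)) for label, files in kept.items()}


if __name__ == "__main__":
    generated = [("raccoon", f"raccoon_{i:03d}.png") for i in range(300)]
    rejected = {"raccoon_007.png", "raccoon_123.png"}  # e.g. extra legs, blurry
    kept = curate(generated, rejected)
    print(len(kept["raccoon"]))  # 298
    print(shortfall(kept))       # {'raccoon': 2}
```

The shortfall tells you how many more images to generate for each class, which is usually just a few more prompts.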

IoT AutoML model training at ONESTEPAI

Once I was satisfied with the overall quality and size of the generated image datasets, it was time to upload them to the ONESTEPAI portal.

ONESTEPAI has tools on their portal to “augment” the number of images used during the training process and to make the whole training fully automated and painless, especially for users with no AI background.
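The portal does not say which augmentations it applies, so this is just a minimal sketch of the general idea: each training image is expanded into several slightly altered copies. It uses two common augmentations (a horizontal flip and a brightness shift) on a tiny grayscale image stored as nested lists rather than real image files; the brightness offset of -40 is an arbitrary illustrative value.

```python
from typing import List, Tuple

Image = List[List[int]]  # grayscale pixels, 0-255, stored row by row (illustration only)


def hflip(img: Image) -> Image:
    """Mirror left-right: an animal facing left now faces right."""
    return [row[::-1] for row in img]


def adjust_brightness(img: Image, delta: int) -> Image:
    """Shift every pixel by `delta`, clamped to 0-255 (e.g. dusk vs. noon lighting)."""
    return [[min(255, max(0, p + delta)) for p in row] for row in img]


def augment(dataset: List[Tuple[str, Image]]) -> List[Tuple[str, Image]]:
    """Return each original image plus a flipped and a darker copy, keeping labels."""
    out = []
    for label, img in dataset:
        out.append((label, img))
        out.append((label, hflip(img)))
        out.append((label, adjust_brightness(img, -40)))
    return out


if __name__ == "__main__":
    img = [[10, 200, 250]]
    print(hflip(img))                   # [[250, 200, 10]]
    print(adjust_brightness(img, -40))  # [[0, 160, 210]]
    print(len(augment([("raccoon", img)] * 300)))  # 900
```

With these two augmentations, 300 raccoon images become 900 training samples without generating anything new.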

Time to shape the datasets on the ONESTEPAI portal

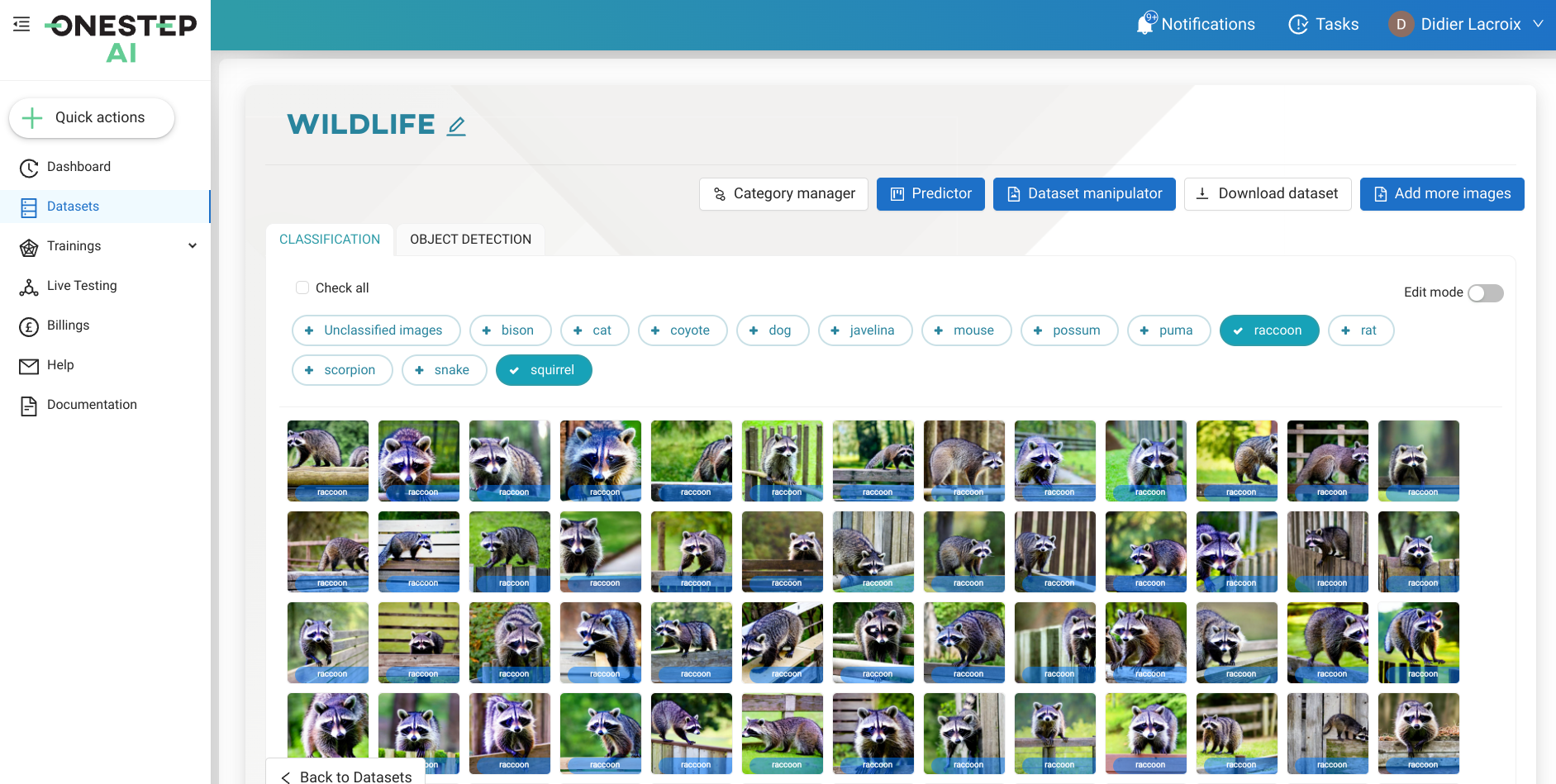

The picture below is a quick peek into the new ONESTEPAI data manipulator.

There, I could upload, review, annotate and combine several datasets to feed into their AutoML training pipeline.
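Under the hood, combining datasets for training generally means merging the per-class image lists and holding some images back for validation. The sketch below is a generic illustration, not ONESTEPAI's pipeline: it assumes each dataset is a `{label: [filenames]}` dictionary, and the 20% validation fraction and seed 42 are arbitrary choices. The split is done per class so every animal appears in both the training and validation sets.

```python
import random


def merge(*datasets):
    """Merge several {label: [filenames]} datasets into one."""
    merged = {}
    for ds in datasets:
        for label, files in ds.items():
            merged.setdefault(label, []).extend(files)
    return merged


def combine_and_split(datasets, val_fraction=0.2, seed=42):
    """Return (train, val) lists of (filename, label), split per class."""
    rng = random.Random(seed)  # fixed seed -> reproducible split
    train, val = [], []
    for label in sorted(datasets):
        files = sorted(datasets[label])  # copy, so the caller's list is untouched
        rng.shuffle(files)
        n_val = round(len(files) * val_fraction)
        val += [(f, label) for f in files[:n_val]]
        train += [(f, label) for f in files[n_val:]]
    return train, val


if __name__ == "__main__":
    raccoons = {"raccoon": [f"raccoon_{i}.png" for i in range(300)]}
    possums = {"possum": [f"possum_{i}.png" for i in range(300)]}
    train, val = combine_and_split(merge(raccoons, possums))
    print(len(train), len(val))  # 480 120
```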

Quickly trained model

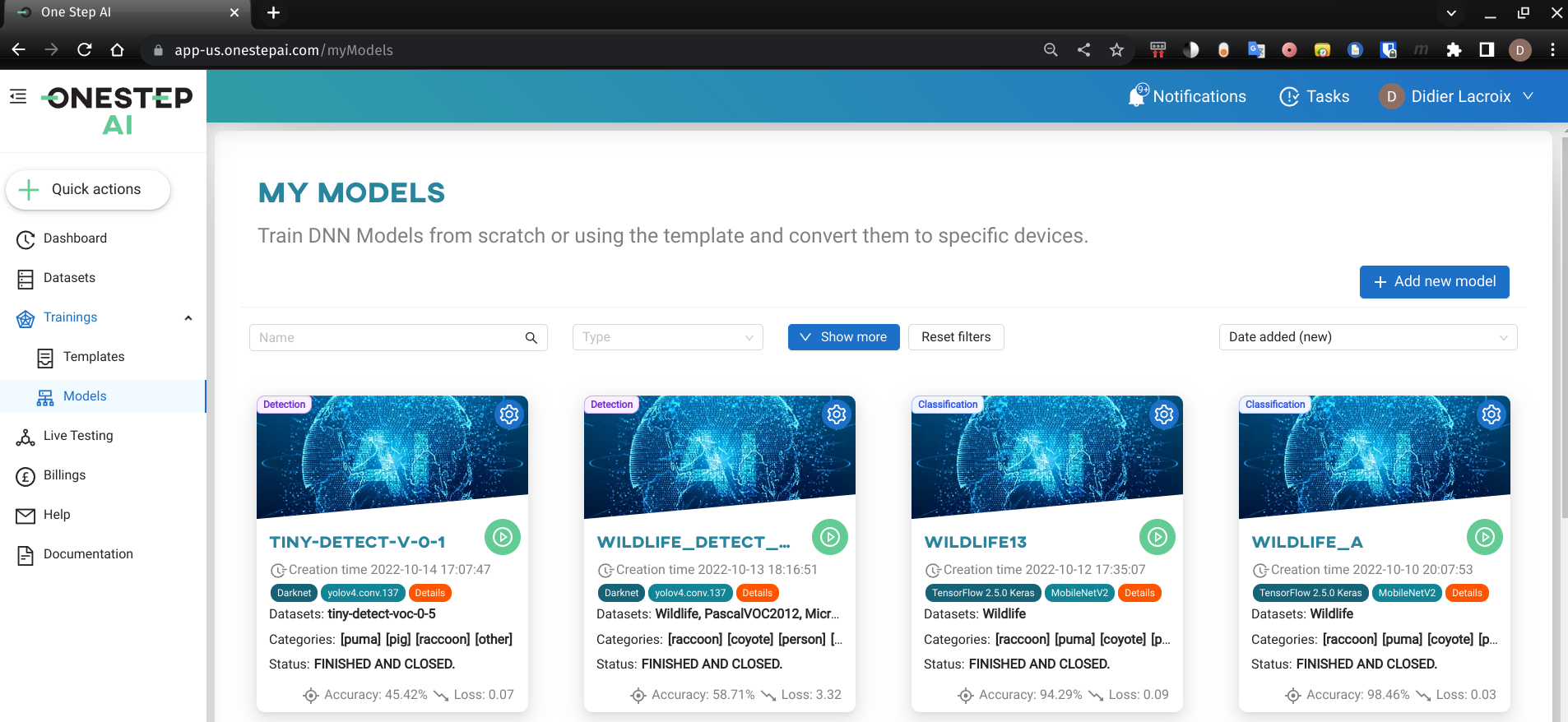

After combining all the datasets, it was time to create the model. As you can see below, training several models is quick and effortless.

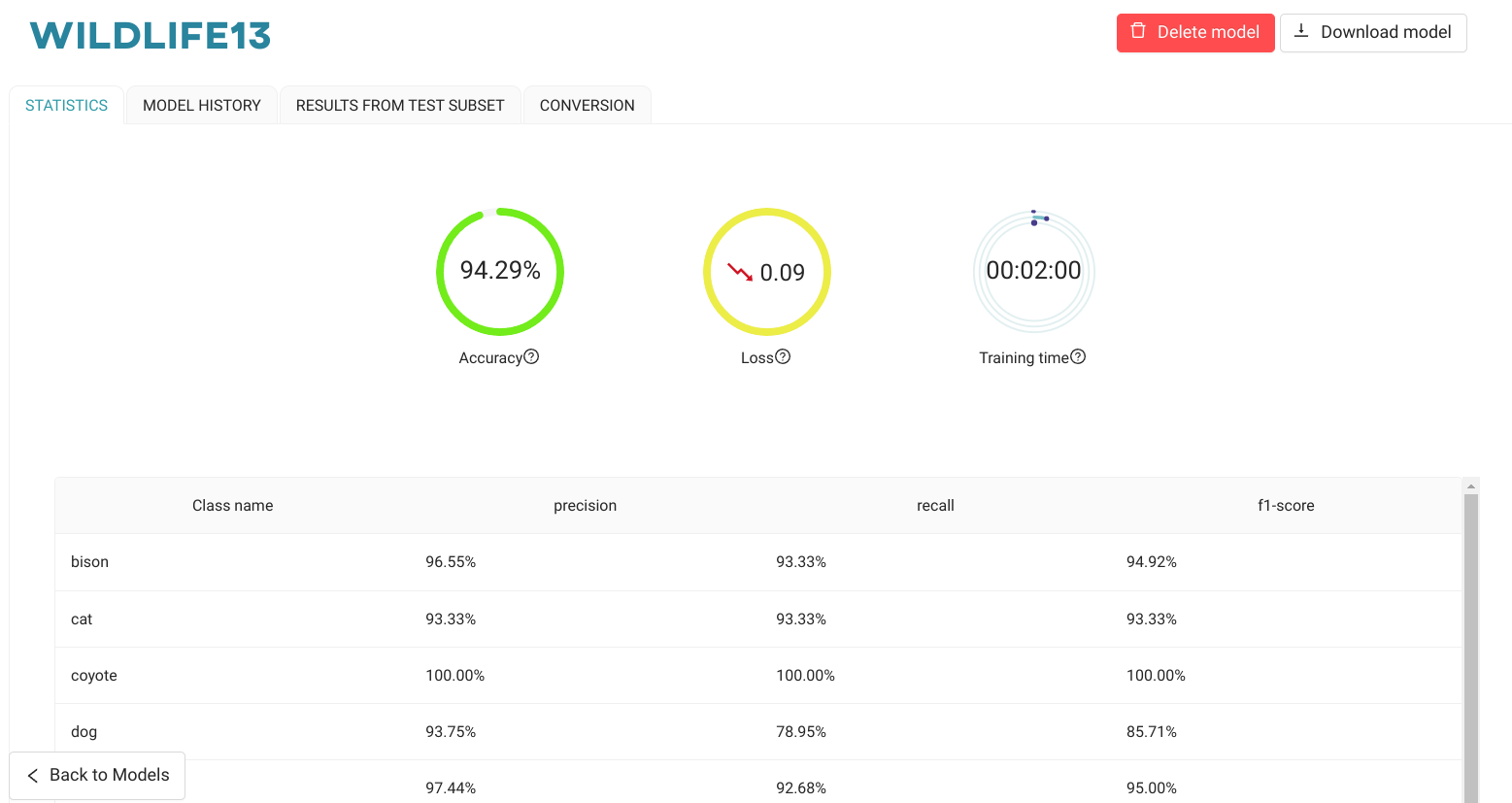

After a training session, ONESTEPAI provides an excellent summary and makes further debugging information available to improve the model’s accuracy.
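If you want to double-check a training summary yourself, a confusion matrix and per-class accuracy are the usual starting points for debugging a classifier. This sketch computes both from plain lists of true and predicted labels; the labels in the example are made up for illustration and are not results from my models.

```python
from collections import defaultdict


def confusion_matrix(y_true, y_pred):
    """Count how often each (true label, predicted label) pair occurs."""
    counts = defaultdict(int)
    for t, p in zip(y_true, y_pred):
        counts[(t, p)] += 1
    return dict(counts)


def per_class_accuracy(y_true, y_pred):
    """Fraction of each true class that was predicted correctly (per-class recall)."""
    totals, correct = defaultdict(int), defaultdict(int)
    for t, p in zip(y_true, y_pred):
        totals[t] += 1
        correct[t] += int(t == p)
    return {label: correct[label] / totals[label] for label in totals}


if __name__ == "__main__":
    y_true = ["raccoon", "raccoon", "possum", "possum"]
    y_pred = ["raccoon", "possum", "possum", "possum"]
    print(per_class_accuracy(y_true, y_pred))  # {'raccoon': 0.5, 'possum': 1.0}
    print(confusion_matrix(y_true, y_pred)[("raccoon", "possum")])  # 1
```

Off-diagonal entries such as (raccoon, possum) point at the classes worth adding more, or better, generated images for.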

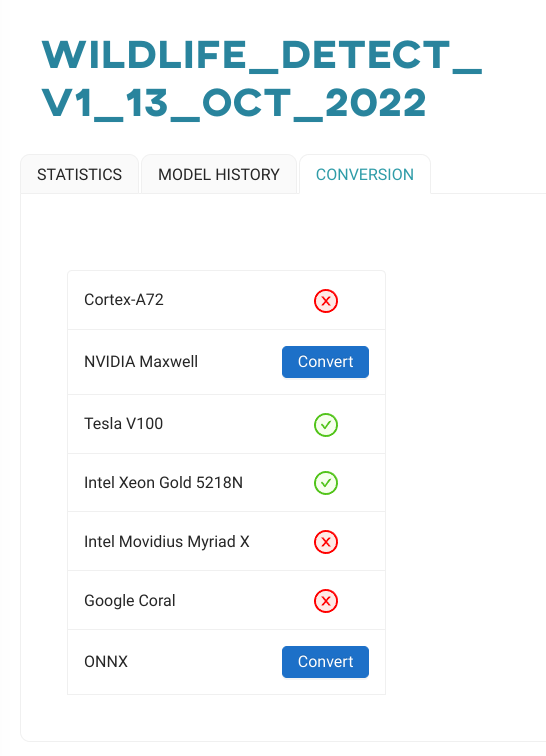

IoT model conversion

The last step at ONESTEPAI was converting the trained model for the target hardware. I eventually selected the Google Coral EdgeTPU as the target and, again, was able to download the converted model very quickly.
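Models compiled for the EdgeTPU must be fully 8-bit integer quantized, so the classifier's raw outputs are integers rather than probabilities. TensorFlow Lite maps them back with the affine formula real = scale × (q − zero_point). The scale and zero-point values below are placeholders; a real model reports its own in the quantization parameters of its output tensor.

```python
def dequantize(values, scale, zero_point):
    """Map 8-bit quantized outputs back to real-valued scores."""
    return [scale * (q - zero_point) for q in values]


if __name__ == "__main__":
    # Placeholder parameters for a uint8 output tensor.
    print(dequantize([0, 128, 255], scale=1 / 256, zero_point=0))  # [0.0, 0.5, 0.99609375]
```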

Your own Web application

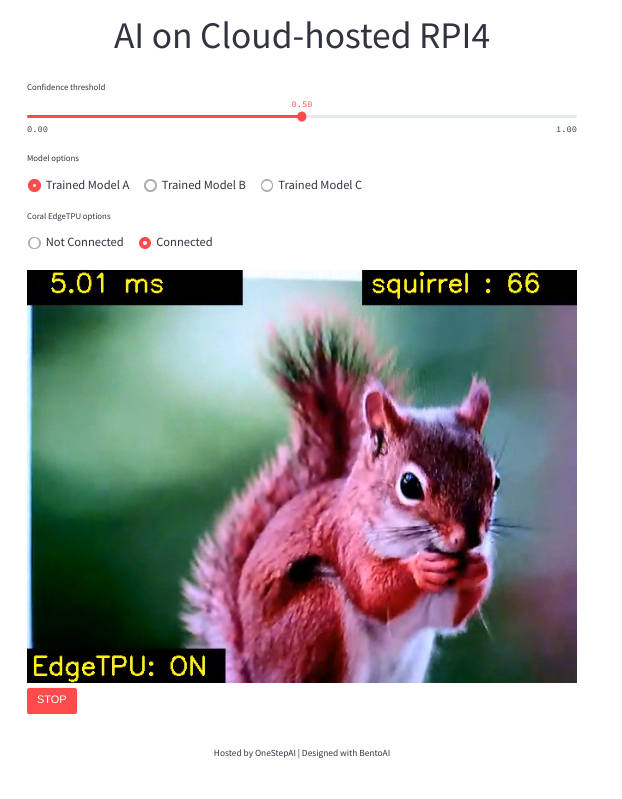

After I obtained my Google Coral EdgeTPU model, I installed it on my “Cloud Hosted RPI4” and was able to conduct some further testing. I chose to reuse a “Streamlit App” I had previously written, so very little new code was needed.

Note that the RPI4 is also running on ONESTEPAI. That is part of the beauty of their new portal: I never needed to run any code locally. Not to mention, good luck finding an RPI4 at a reasonable price.
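The app itself only needs a little decision logic on top of the model's scores to act as a raccoon detector. This is a minimal sketch, not the code from my Streamlit app: it assumes a labels file with one `<index> <name>` entry per line and already-dequantized class scores, and the 0.6 alert threshold is an arbitrary illustrative value.

```python
def parse_labels(text):
    """Parse label-file lines of the form '<index> <name>' into {index: name}."""
    labels = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines
        index, name = line.split(maxsplit=1)
        labels[int(index)] = name
    return labels


def raccoon_alert(scores, labels, target="raccoon", threshold=0.6):
    """Return (is_alert, top_label, top_score) for one frame's class scores."""
    top = max(range(len(scores)), key=scores.__getitem__)
    top_label = labels.get(top, str(top))
    return top_label == target and scores[top] >= threshold, top_label, scores[top]


if __name__ == "__main__":
    labels = parse_labels("0 coyote\n1 javelina\n2 possum\n3 raccoon\n4 squirrel\n")
    print(raccoon_alert([0.05, 0.05, 0.1, 0.7, 0.1], labels))  # (True, 'raccoon', 0.7)
```

Keeping this logic separate from the UI code makes it easy to test without a camera or an EdgeTPU attached.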

Next Steps

The technological advances by companies like Stability AI and ONESTEPAI provide new alternatives to other AI cloud leaders, who aim their services and products at data scientists and other AI experts.

Creating a vision-based AI web application, including going from no data to a trained model, in so little time is quite a feat. More professional results take a few hours to a few days.

This stems from the superpowers imparted to you by “Stable Diffusion” and “IoT AutoML”. It is incredible how quickly these “text to image” algorithms keep improving, week after week.

My proposed approach is easy to duplicate and gives quick results, making AI more accessible to a broader range of interested parties.

While the results are highly encouraging, don’t expect instant state-of-the-art (SOTA) results. I still have more to do to elevate my game. But, as it goes, I have other ideas to take the presented paradigm to another level.

Stay tuned and let me know what you would do with this approach or if you have other ideas 👋🏻.